AI AND THE FUTURE OF NEWS: What Does the Public in Six Countries Think of Generative AI in News? | Reuters Institute

Introduction

The public launch of OpenAI’s ChatGPT in November 2022 and subsequent developments have spawned huge interest in generative AI. Both the underlying technologies and the range of applications and products involving at least some generative AI have developed rapidly (though unevenly), especially since the publication in 2017 of the breakthrough ‘transformers’ paper (Vaswani et al. 2017) that helped spur new advances in what foundation models and Large Language Models (LLMs) can do.

These developments have attracted much important scholarly attention, ranging from computer scientists and engineers trying to improve the tools involved, to scholars testing their performance against quantitative or qualitative benchmarks, to lawyers considering their legal implications. Wider work has drawn attention to built-in limitations, issues around the sourcing and quality of training data, and the tendency of these technologies to reproduce and even exacerbate stereotypes and thus reinforce wider social inequalities, as well as the implications of their environmental impact and political economy.

One important area of scholarship has focused on public use and perceptions of AI in general, and generative AI in particular (see, for example, Ada Lovelace Institute 2023; Pew 2023). In this report, we build on this line of work by using online survey data from six countries to document and analyze public attitudes towards generative AI, its application across a range of different sectors in society, and, in greater detail, in journalism and the news media specifically.

We go beyond already published work on countries including the USA (Pew 2023; 2024), Switzerland (Vogler et al. 2023), and Chile (Mellado et al. 2024), both in terms of the questions we cover and specifically in providing a cross-national comparative analysis of six countries that are all relatively privileged, affluent, free, and highly connected, but have very different media systems (Humprecht et al. 2022) and degrees of platformisation of their news media system in particular (Nielsen and Fletcher 2023).

The report focuses on the public because we believe that – in addition to economic, political, and technological factors – public uptake and understanding of generative AI will be among the key factors shaping how these technologies are being developed and used, and what they, over time, will come to mean for different groups and different societies (Nielsen 2024).

There are many powerful interests at play around AI, and much hype – often positive salesmanship, but sometimes wildly pessimistic warnings about possible future risks that might even distract us from already present issues. But there is also a fundamental question of whether and how the public at large will react to the development of this family of products.

Will it be like blockchain, virtual reality, and Web3, all promoted with much bombast but with little popular uptake so far? Or will it be more like the internet, search, and social media – hyped, yes, but also quickly becoming part of billions of people’s everyday media use?

To advance our understanding of these issues, we rely on data from an online survey focused on understanding if and how people use generative AI, and what they think about its application in journalism and other areas of work and life.

We first present the methodology. We then cover public awareness and use of generative AI, expectations for generative AI’s impact on news and beyond, how people think AI is being used by journalists right now, and how people think journalists should use generative AI, before offering a concluding discussion.

As with all survey-based work, we are reliant on people’s own understanding and recall. This means that many responses here will draw on broad conceptions of what AI is and might mean, and that, when it comes to generative AI in particular, people are likely to answer based on their experience of using free-standing products explicitly marketed as being based on generative AI, like ChatGPT.

Most respondents will be less likely to be thinking about incidents where they may have come across functionalities that rely in part on generative AI, but do not draw as much attention to it – a version of what is sometimes called ‘invisible AI’ (see, for example, Alm et al. 2020). We are also aware that these data reflect a snapshot of public opinion, which can fluctuate over time.

We hope the analysis and data published here will help advance scholarly analysis by complementing the important work done on the use of AI in news organisations (for example, Beckett and Yaseen 2023; Caswell 2024; Diakopoulos 2019; Diakopoulos et al. 2024; Newman 2024; Simon 2024), including its limitations and inequities (see, for example, Broussard 2018, 2023; Bender et al. 2021), and help centre the public as a key part of how generative AI will develop and, over time, potentially impact many different sectors of society, including journalism and the news media.

Table of contents of the report “AI AND THE FUTURE OF NEWS: What Does the Public in Six Countries Think of Generative AI in News?”:

- About the Authors

- Acknowledgments

- Executive Summary and Key Findings

- Introduction

- Methodology

- 1. Public Awareness and Use of Generative AI

- 2. Expectations for Generative AI’s Impact on News and Beyond

- 3. How People Think Generative AI is Being Used by Journalists Right Now

- 4. What Does the Public Think About How Journalists Should Use Generative AI?

- Conclusion

- References

Number of Pages:

- 42 pages

Pricing:

- Free

Methodology

The report is based on a survey conducted by YouGov on behalf of the Reuters Institute for the Study of Journalism (RISJ) at the University of Oxford. The main purpose is to understand if and how people use generative AI, and what they think about its application in journalism and other areas of work and life.

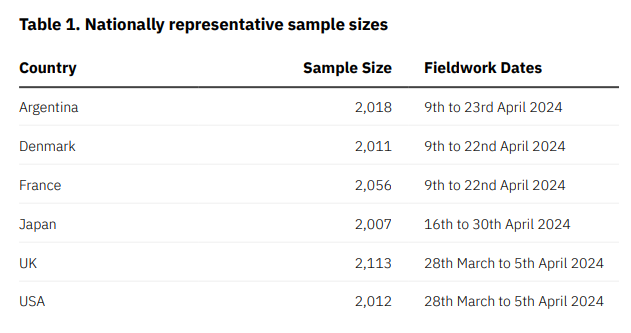

The data were collected by YouGov using an online questionnaire fielded between 28 March and 30 April 2024 in six countries: Argentina, Denmark, France, Japan, the UK, and the USA.

YouGov was responsible for the fieldwork and provision of weighted data and tables only, and RISJ was responsible for the design of the questionnaire and the reporting and interpretation of the results.

Samples in each country were assembled using nationally representative quotas for age group, gender, region, and political leaning. The data were weighted to targets based on census or industry-accepted data for the same variables.
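The report does not describe the exact weighting procedure YouGov used. As a rough illustration of the general idea of weighting a sample to population targets, the sketch below applies simple post-stratification on a single variable. The function name, the age bands, and the target shares are all hypothetical and are not taken from the survey.

```python
from collections import Counter

def poststratification_weights(groups, targets):
    """Return one weight per respondent: target share / sample share of their group.

    `groups` is a list of group labels (one per respondent) and `targets`
    maps each label to its assumed population share. Both are illustrative.
    """
    counts = Counter(groups)
    n = len(groups)
    return [targets[g] / (counts[g] / n) for g in groups]

# Hypothetical sample of 10 respondents that over-represents the oldest group.
sample_age = ["18-34"] * 2 + ["35-54"] * 3 + ["55+"] * 5
# Hypothetical population targets (for example, from census data).
targets = {"18-34": 0.3, "35-54": 0.3, "55+": 0.4}

weights = poststratification_weights(sample_age, targets)

# After weighting, each group's weighted share matches its target share.
weighted_share_young = sum(
    w for w, g in zip(weights, sample_age) if g == "18-34"
) / sum(weights)
```

Weighting to several variables at once (age group, gender, region, and political leaning, as in this survey) is usually done with a more involved procedure; this single-variable version only shows the principle.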

Sample sizes are approximately 2,000 in each country. The use of a non-probability sampling approach means that it is not possible to compute a conventional ‘margin of error’ for individual data points. However, differences of +/- 2 percentage points (pp) or less are very unlikely to be statistically significant and should be interpreted with a very high degree of caution. We typically do not regard differences of +/- 2pp as meaningful, and as a general rule, we do not refer to them in the text.
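For intuition only, the sketch below computes what the margin of error would be under the hypothetical assumption of a simple random sample of 2,000 respondents. This assumption does not hold for the non-probability samples used here, so the numbers are a benchmark rather than a property of the survey. The function names and the 1.96 critical value (95% confidence) are our own illustrative choices.

```python
import math

def srs_margin_of_error(p, n, z=1.96):
    """Margin of error for a single proportion under simple random sampling."""
    return z * math.sqrt(p * (1 - p) / n)

def srs_margin_of_difference(p1, n1, p2, n2, z=1.96):
    """Margin of error for the difference between two independent proportions."""
    return z * math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)

# Worst case (p = 0.5) with roughly 2,000 respondents per country.
moe = srs_margin_of_error(0.5, 2000)
diff = srs_margin_of_difference(0.5, 2000, 0.5, 2000)

print(f"single estimate: +/- {moe * 100:.1f}pp")
print(f"difference between two countries: +/- {diff * 100:.1f}pp")
```

Under these idealised assumptions a single estimate carries roughly +/- 2.2pp of uncertainty, and a gap between two countries roughly +/- 3.1pp, which is consistent with treating differences of 2pp or less as not meaningful.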

It is important to note that online samples tend to under-represent the opinions and behaviors of people who are not online (typically those who are older, less affluent, and have limited formal education). Moreover, because people usually opt into online survey panels, they tend to over-represent people who are well-educated and socially and politically active.

Some parts of the survey require respondents to recall their past behavior, which can be flawed or influenced by various biases. Additionally, respondents’ beliefs and attitudes related to generative AI may be influenced by social desirability bias, and when asked about complex socio-technical issues, people will not always be familiar with the terminology experts rely on or understand the terms the same way. We have taken steps to mitigate these potential biases and sources of error by implementing careful questionnaire design and testing.
