Twitter Re-Launches Test of Warnings on Potentially Harmful Tweet Replies

Amy Harrison


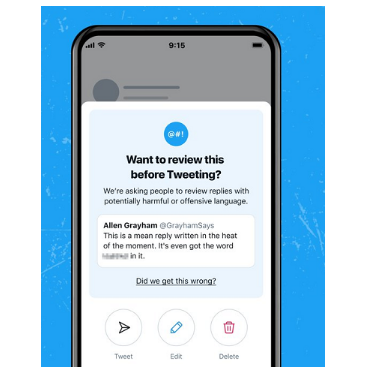

Last May, Twitter launched a limited test of warning prompts on tweet replies containing potentially offensive remarks. Twitter is now relaunching the alerts with a new format and further explanation as to why each reply has been flagged.

As you can see above, the new alerts explain that Twitter is ‘asking people to review replies with potentially harmful or offensive language’. There are then three large buttons below the prompt: you can tweet the reply anyway, edit your response, or bin it.

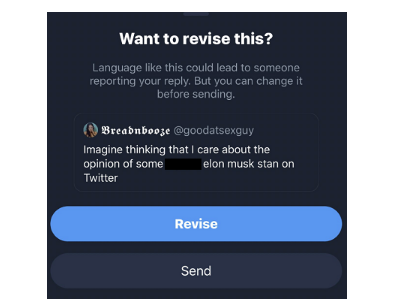

The format has changed significantly since the original launch, which was a far more basic prompt.

Twitter updated the format in August, before shelving the test during the peak of the US election campaign. Now, with the chaos of that period behind us, Twitter is trying out the warning prompts once again, with users on iOS set to get the alerts if Twitter detects any potentially offensive terms in their replies.

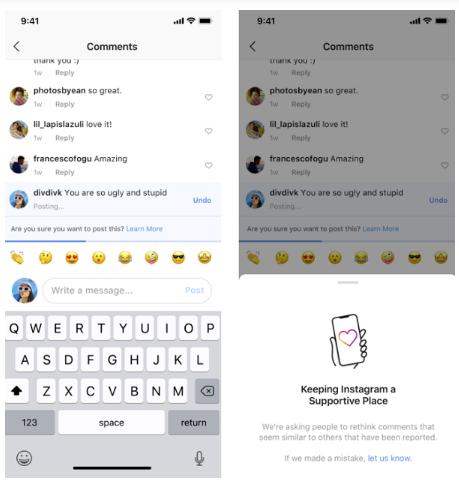

The system is similar to Instagram’s automated alerts on potentially offensive comments, which Instagram released in July 2019.

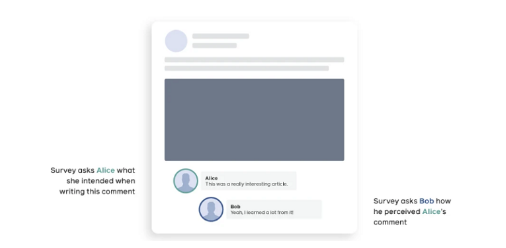

Those prompts have helped reduce one of the key causes of friction in online interaction, which is not intentional offense but misinterpretation. Last year, Facebook published a research report which found that misinterpretation was the main element in causing angst and argument among Facebook users.

If users are simply prompted to re-assess their language, many online disputes could likely be avoided entirely, and that’s likely even more true on Twitter, where condensing your thoughts into 280 characters can sometimes lead to unintended messaging.

Twitter has also seen success with other prompts that make users take a moment to re-assess what they’re tweeting.

Twitter’s similar alerts on articles that users attempt to retweet without actually opening the article link have led to people opening articles 40% more often after seeing the prompt, and have reduced blind retweets significantly. Twitter has also tried other variations of the same, including alerts on content disputed by fact-checkers.

Simple, additional steps like this can give users a moment of pause to re-think their actions, and that may be all that’s needed to reduce unnecessary aggression.

It’s one of several measures that Twitter is trying as it continues to focus on improving platform health and ensuring more positive interactions within the app. Those efforts have seen Twitter drive important improvements over the last few years. It still has a way to go on this front, but it’s good to see the platform continuing to test out new ideas.
