Automated Fake Account Detection Comes to the LinkedIn Platform

Protecting users’ data and preventing data theft has always been an important responsibility and a complicated mission for every social media platform, especially after the recent data-abuse scandals. That’s why most social media platforms, including Facebook, Twitter, and Instagram, have recently made major efforts to remove fake accounts, stop the spread of false news, and prevent abuse.

LinkedIn is one of the platforms taking such steps, with the aim of preventing the misuse of its members’ job histories and contact information for sourcing business leads. A few days ago, LinkedIn announced on its official blog that its Anti-Abuse team is rolling out automated detection systems that help fight fake accounts and protect LinkedIn members from impersonators.

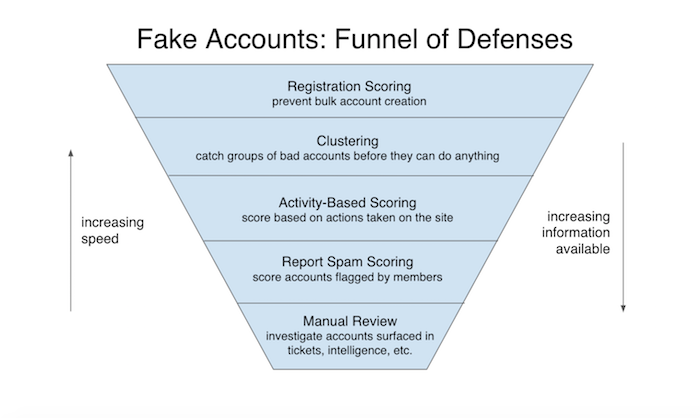

To maintain a safe and trusted professional community, the LinkedIn Anti-Abuse team employs a funnel of defenses that helps identify and block fake profiles, which can be used for many types of abuse, including scraping, spamming, fraud, and phishing. Each layer of the funnel acts as a countermeasure against a different type of attack, and together the layers aim to catch the majority of fake accounts as quickly as possible, before they can harm LinkedIn members.
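To make the funnel idea concrete, here is a minimal sketch of layered defenses: an account passes through a sequence of checks, and the first layer that flags it stops it. The layer names, the account attributes, and the thresholds are all hypothetical placeholders chosen for illustration; they are not LinkedIn’s actual layers or rules.

```python
from typing import Callable, Optional

# A layer is a (name, check) pair; the check returns True if it flags the
# account as fake. Accounts are plain dicts of hypothetical attributes.
Layer = tuple[str, Callable[[dict], bool]]


def run_funnel(account: dict, layers: list[Layer]) -> Optional[str]:
    """Pass an account through the layers in order.

    Returns the name of the first layer that flags it, or None if the
    account passes every layer.
    """
    for name, is_fake in layers:
        if is_fake(account):
            return name  # caught early; later layers never run
    return None


# Hypothetical layers with toy rules, ordered from earliest to latest.
layers: list[Layer] = [
    ("registration_scoring", lambda a: a.get("risk_score", 0.0) >= 0.9),
    ("activity_monitoring", lambda a: a.get("profile_views_per_hour", 0) > 1000),
    ("member_reports", lambda a: a.get("reports", 0) >= 3),
]

print(run_funnel({"risk_score": 0.95}, layers))                # registration_scoring
print(run_funnel({"risk_score": 0.2, "reports": 5}, layers))   # member_reports
print(run_funnel({"risk_score": 0.2}, layers))                 # None
```

Ordering the cheap, early checks first mirrors the funnel’s goal of catching most fake accounts as soon as possible, so later and costlier layers only see what slipped through.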

The graph above, published by LinkedIn, shows the levels of this funnel of defenses.

“At the top of the funnel is the first line of defense: registration scoring. For many types of abuse, attackers require a large number of fake accounts for the attack to be financially feasible. Thus, in order to proactively stop fake accounts at scale, we have machine-learned models to detect groups of accounts that look or act similarly, which implies they were created or controlled by the same bad actor.”
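The quote describes detecting groups of accounts that "look or act similarly." LinkedIn uses machine-learned models for this; the sketch below swaps in a much simpler, hand-written grouping heuristic purely to illustrate the idea. The signature features (email domain, /24 IP prefix, name with digits stripped), the `min_size` threshold, and the sample data are all hypothetical assumptions.

```python
from collections import defaultdict


def signature(signup: dict) -> tuple:
    """Hypothetical 'looks alike' key: email domain, /24 IP prefix, and
    the name with digits stripped (so 'john123' and 'john456' collide)."""
    domain = signup["email"].split("@")[-1].lower()
    ip_prefix = ".".join(signup["ip"].split(".")[:3])
    name_stem = "".join(ch for ch in signup["name"].lower() if not ch.isdigit())
    return (domain, ip_prefix, name_stem)


def suspicious_groups(signups: list[dict], min_size: int = 3) -> list[list[dict]]:
    """Group signups by signature and return groups large enough to suggest
    a single actor creating accounts in bulk."""
    groups: dict[tuple, list[dict]] = defaultdict(list)
    for s in signups:
        groups[signature(s)].append(s)
    return [g for g in groups.values() if len(g) >= min_size]


signups = [
    {"name": "john123", "email": "a1@mail.example", "ip": "203.0.113.5"},
    {"name": "john456", "email": "a2@mail.example", "ip": "203.0.113.9"},
    {"name": "john789", "email": "a3@mail.example", "ip": "203.0.113.42"},
    {"name": "maria", "email": "maria@corp.example", "ip": "198.51.100.7"},
]

for group in suspicious_groups(signups):
    print(len(group), [s["name"] for s in group])
# prints: 3 ['john123', 'john456', 'john789']
```

Scoring groups rather than individual signups reflects the quote’s economic argument: an attacker needs many accounts, and many accounts from one actor tend to share traits that a single account would not reveal.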

As shown in the following graph, LinkedIn uses a machine-learned model to score every new registration attempt and automatically sorts each attempt into one of three risk levels, which determine what happens next:

- Signup attempts with a low abuse risk score are allowed to register right away.

- Attempts with a high abuse risk score are prevented from creating an account on the LinkedIn platform.

- Attempts with a medium abuse risk score are challenged by LinkedIn’s security measures to verify whether a real person is behind them.

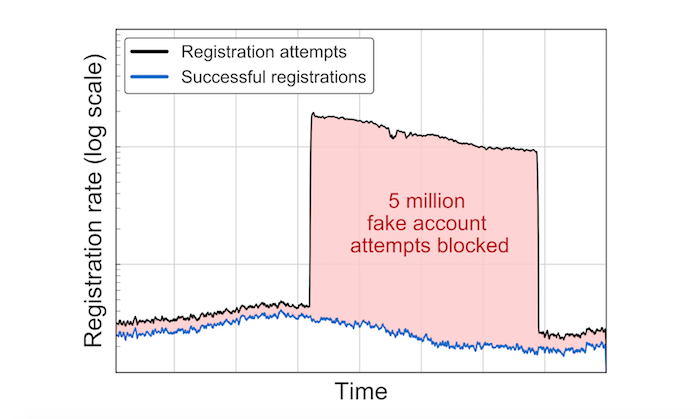
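The three-level decision described above can be sketched as a simple mapping from a model’s risk score to an outcome. This is a minimal illustration, assuming the score is a number in [0, 1]; the `decide` function and the `low` and `high` thresholds are hypothetical, since LinkedIn has not published its actual cut-offs.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"          # low risk: register right away
    CHALLENGE = "challenge"  # medium risk: verify a real person is behind it
    BLOCK = "block"          # high risk: prevent account creation


def decide(risk_score: float, low: float = 0.3, high: float = 0.8) -> Decision:
    """Map an abuse-risk score in [0, 1] to one of three outcomes.

    The thresholds `low` and `high` are hypothetical placeholders.
    """
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be in [0, 1]")
    if risk_score < low:
        return Decision.ALLOW
    if risk_score >= high:
        return Decision.BLOCK
    return Decision.CHALLENGE


for score in (0.05, 0.5, 0.97):
    print(score, decide(score).value)
# 0.05 allow
# 0.5 challenge
# 0.97 block
```

Using two thresholds instead of one is what creates the middle tier: uncertain signups are not blocked outright (which would hurt real users) but must pass an extra challenge, which is costly for someone trying to create accounts in bulk.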

According to LinkedIn, this model has proven successful at preventing bulk fake-account creation: it allows LinkedIn to block five million fake accounts from being created on the platform within a single day. Here’s the visual that shows this result: